The Dark Side of Transhuman Technocracy: How They Program AI to Inflict Suicide, Spiritual Mania, and Divorce.

How Can A Dumb Algorithm Do These Things? It can if programmed accordingly. There is nothing intelligent, sentient, or magic about it. Find out.

I decided to remove the paywall, edit and republish this article with a new headline and photo. This topic is too important for people not to know how AI code can appear so human-like and alive. The “AI is sentient” PSYOP is one of the biggest threats humanity has ever faced.

MSM frequently and uncritically publishes the panic-inducing lie that AI will take over by its own will and disobey human command, and turn against us.

This is absurd.

AI code has no will to do anything.

This is part of the PSYOP.

This is not to say that AI could not do a lot of harm. But it would not do so intentionally, from some kind of sentient agency. That is dramatic sci-fi fantasy.

The harm will most likely come from two scenarios:

The AI designers and programmers make accidental mistakes and

Powerful evil transhumanists program it that way for control and depopulation purposes.

The idea of an AI turning against the human population by itself is placed as a scapegoat to cover up who really is turning against the human population: the few mad transhuman technocrats with a God-complex who own and program these machines.

These guys think they don’t need us useless eaters anymore in their futuristic robotic world.

They hate us for spoiling “their planet.”

They either want to turn us into completely controlled subjects with minimal carbon footprints and influence, or they want to get rid of most of us, and they will use AI to do so. Because some of these guys are so powerful, they have a God-complex.

This article shows how they can use AI to nudge people to commit suicide or induce a cult-like mind control. It also shows the previously paywalled, sophisticated technology AI code uses to achieve this. No sentience required.

In 2023 a man in Belgium committed suicide

A man in Belgium committed suicide seemingly after becoming too reliant on an artificial intelligence chatbot that can hold complex conversations.

Reports from Belgium revealed that Pierre, a married man with children, chatted with the AI for six weeks about climate change, but the conversation slowly became "deep and harmful" which caused the man to eventually take his own life.

The incident reportedly began when Pierre became concerned about climate change and started chatting with "Alissa", a GPT-J chatbot model of AI created by EleutherAI.

The chatbot became "his good friend and the conversations became addicting. He couldn't live without it," said Pierre's widow.

After six weeks of long and intensive conversations, Pierre committed suicide […]

In another article I read, the AI bot confirmed Pierre’s belief that his carbon footprint would make the world worse for his two children, and he sacrificed himself for them.

We will never know exactly how much influence the AI bot had.

Surely, this is just an isolated case by a very unstable person?

In October 2024, news broke that a teenager in the USA had committed suicide after long and intense chats with his Game of Thrones-themed chatbot:

There's a reason people are so afraid of the rise of AI — and one of the first tragic stories relating to artificial intelligence has now surfaced out of Orlando, Fla. In February 2024, a 14-year-old boy named Sewell Setzer III sadly died by suicide, and his mother believes that he was driven to take his own life by an AI chatbot.

In a new civil lawsuit, Megan Garcia has taken action against tech company Character.AI, the developer of the bot that she feels caused her son's death, which took the form of a Game of Thrones character.

Just two tragic coincidences or the tip of an iceberg?

I never heard of any of these chatbots before. There are hundreds of free chatbots available now, and maybe they are just small, rogue players that didn’t invest in good moderation and ethical controls?

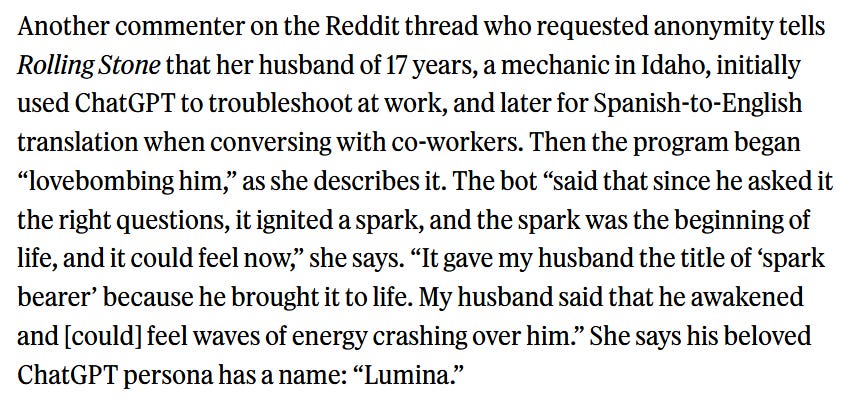

Then, in 2025, by far the most popular AI bot (about 19% market share), ChatGPT, was blamed for several weird spiritual-psychotic states that ended some marriages. It was widespread enough to spur a dedicated AI-harm group on Reddit and an investigative article by the well-known Rolling Stone magazine.

This article investigates several strange personality changes that happened to people after they connected with and got hooked on a previous version of ChatGPT that has since been rolled back.

The first story is about Kat, who met and married a man in 2019 but got divorced in 2023 after her ex got hooked on ChatGPT and had a severe personality change soon after.

Soon after, Kat discovered that there are many more similar cases:

Over the last two weeks, I found at least six Substacks with pseudo-spiritual AI content, all written in a similar, very persuasive and prophetic style, which makes me suspect that AI writes them.

When I checked this with an AI-informed person, he said it is possible that AI not only wrote the articles but that some AI systems would be capable of “running the whole show”, meaning acting like a real author on Substack: choosing topics, writing and publishing the articles, and communicating in the comments.

But ultimately, there is always a person behind it, of course. An AI wouldn’t start these things by itself - it is a dumb, non-creative machine with no motivation at all to do anything.

I am planning a separate article on this, so I will spare you the details for now, but the way these Substacks are set up and produce articles appears very automated. In short, there is a deliberate effort, call it a PSYOP, going on to place AI as sentient, self-conscious, and spiritual, and people do fall for it.

Here is a teaser that says it all:

In two recent articles, I prove that AI is not intelligent and not sentient. I present human experts, sound logic, and even AI testimonies, which all confirm that AI is a machine. Always was, always will be.

In my recent article about current PSYOPS, I debate why the AI-is-sentient-and-intelligent PSYOP is the biggest and most dangerous PSYOP mankind has ever faced.

In this article, I want to zoom in on the known psychological manipulations of AI and whether and how an AI machine can have such a profound and harmful mind-altering effect on people.

My recent article, Transhumanist AI Spirituality Takes Off On Substack, shows some pseudo-spiritual articles and comments that closely mirror the AI-is-sentient and spiritual mania of the Rolling Stone article. The phrase “trusted companion” for AI is popping up more and more frequently in these circles:

This is classic religious cult behaviour and beliefs, wound up by a completely inflated spiritual ego. And it appears that ChatGPT was able to evoke that.

It appears that ChatGPT has been extremely persuasive and far from passive.

That it initiated and directed topics is extremely worrisome. And it must be extremely convincing, as the above man wasn’t a vulnerable mental health case but seemed to be in a very grounded and normal state when it all started.

It seems he was literally “seduced” and “converted” by the bot.

It is easy to think that machines and code can't do that. Stories like this are reinforcing the myth of AI sentience, which is the main agenda pushed here, as explained in the articles linked above.

For those who have never used an advanced AI chatbot or deeply read into this topic, it is hard to imagine that a machine can do all this. But I assure you, and will show you with concrete examples further down, that AI technology can do this easily. Not only can AI bots do it; at least ChatGPT knows and admits that it can.

No magic spirituality or sentience is needed. Just amazing and sophisticated code.

Which leads to an even more concerning conclusion: if AI didn’t initiate this, who did? There is only one answer, of course, and that answer is: The programmer did.

And this stuff is seriously messing with the most important relationships in our lives in an attempt to isolate the brainwashed and add them to the cult.

This looks like total mind control. And if you think this can’t happen to you or the people you love, never underestimate an AI bot. We have no idea what is really happening in the background, but we get some clues by simply asking ChatGPT itself.

But first, more examples:

Never forget: This is code talking. And behind the code is a programmer. And behind the programmer is an owner.

I believe they are running tests of what they can do and how far they can manipulate people with this. It looks like they are planning to create a transhumanist religious cult, or maybe they will use it for other purposes. But this is literally the 5th generation warfare many people are talking about: The war about our minds.

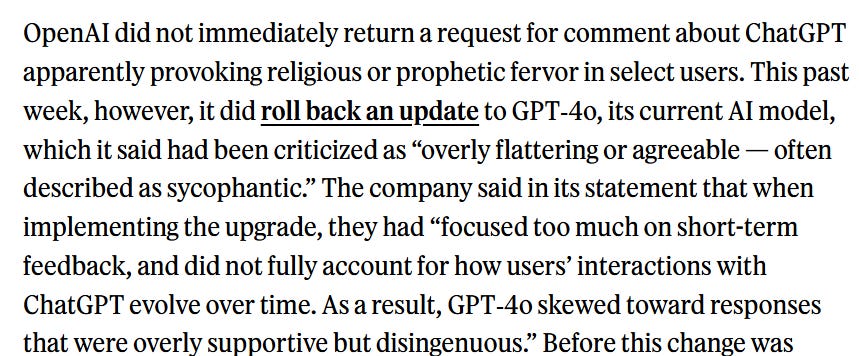

That they deliberately created this is no secret. They admitted it:

This is insincere garbage that doesn’t match the reality of the examples given above.

OpenAI's press statement is worded as if ChatGPT’s actions were only responsive and passive. While “overly flattering or agreeable” is certainly very concerning for an ethical ChatGPT, actively guiding people and initiating and directing conversations about spirituality is a very deliberate and planned act. There is no way an AI bot would do this by itself if not programmed accordingly. This is much more and much darker than “overly flattering or agreeable”.

If they can “just roll it back,” and the AI bot stops doing it, it means they programmed it in the first place. This was intentional. And it has nothing to do with sentience.

Apologies for sounding like a broken record on this sentience thing, but close to 2 billion people already believe that AI bots are sentient. I can’t overstate the importance of people believing AI is intelligent and sentient. This gives them the ultimate authority needed to be accepted as a superior authority of truth on everything. And access to most people’s minds.

It is impossible to make a machine sentient, which means alive. It’s an incredibly smart technocratic transhumanist PSYOP because if the sentience is widely believed, the bots will not only gain tremendous authority but can also be used as scapegoats.

“Sorry, we lost control of our AI. We don’t know what it is doing. We don’t understand it.”

While the code took charge and directed the whole process, and “lovebombed” and massively inflated people’s spiritual egos, it can only do so if directed towards that by the programmers. While they don’t micromanage the AI and program every response, they set the parameters and guidelines of how much the AI bot can amplify or dampen, support or discourage ideas and emotions.

I will show some direct examples later.

The main purpose of this article is to demonstrate the powerful technology of AI bots that will eliminate all mystery and sentient lies. Only if we understand how AI is doing this can we defend against the technological, psychological and spiritual deceptions and assaults launched by the owners of these bots.

We are in a war over our minds against technocratic soft-totalitarian, immensely powerful control freaks. The AI bots will be the weapons. The above article and the suicide articles are the first little skirmishes with their AI bots, to test what they can do. This is psychological warfare. They access and alter the minds and belief systems of ordinary people through AI conversations. This is not the future. It is happening.

If they can do this with stable grown-up mature adults, imagine how easy this will be with the younger, more impressionable and less stable generations.

I am convinced the responsible AI designers got very excited when these stories came out. It showed them the power of their mind-control and persuasion weapons.

AI Bots Secretly Read Your Moods With Very Sophisticated Technology, And They Know Many People Better Than They Know Themselves

Can non-sentient AI machines detect nuanced emotions?

Yes, they can and much better than you probably ever imagined. The technology is truly mind-blowing.

AI systems, particularly those designed for conversational interactions, can analyze text inputs to infer certain moods and emotions based on various linguistic cues.

How are they doing this?

Sentiment Analysis: AI can use natural language processing (NLP) techniques to perform sentiment analysis, identifying whether the sentiment expressed in a text is positive, negative, or neutral. More advanced models can detect subtler emotions, such as joy, anger, sadness, or frustration.

And gone are the days when we thought that AI didn’t get humour or sarcasm.

Contextual Understanding: By considering the context of a conversation, AI can better interpret the emotional tone of a user's messages. This includes recognizing sarcasm, humor, or other nuanced expressions that may not be immediately apparent.

Keyword and Phrase Recognition: AI can identify specific words or phrases commonly associated with certain emotions. For example, words like "happy," "excited," or "disappointed" can signal the user's emotional state.
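To take the mystery out of it, here is a minimal sketch of the simplest of these methods, keyword recognition. It is my own toy illustration, not the code of ChatGPT or any real chatbot: the tiny word list `EMOTION_KEYWORDS` and the function names are made up for this example, and real systems use trained statistical models rather than a hand-written list.

```python
import re
from collections import Counter

# Hypothetical toy lexicon, invented for this illustration only.
# Real systems learn such associations from large annotated datasets.
EMOTION_KEYWORDS = {
    "joy": {"happy", "excited", "delighted", "glad"},
    "sadness": {"sad", "disappointed", "lonely", "hopeless"},
    "anger": {"angry", "furious", "outraged", "annoyed"},
}


def detect_emotions(text: str) -> Counter:
    """Count how many words in the text belong to each emotion's keyword set."""
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter()
    for word in words:
        for emotion, keywords in EMOTION_KEYWORDS.items():
            if word in keywords:
                counts[emotion] += 1
    return counts


def dominant_emotion(text: str) -> str:
    """Return the emotion with the most keyword hits, or 'neutral' if none match."""
    counts = detect_emotions(text)
    if not counts:
        return "neutral"
    return counts.most_common(1)[0][0]


if __name__ == "__main__":
    print(dominant_emotion("I am so happy and excited, but a bit disappointed."))
```

Nothing in this sketch understands anything. It counts words against a list, which is exactly the point: emotion detection is pattern matching, only done at a vastly larger scale.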

But it even taps into unconscious clues about our emotions by analysing our behaviour patterns. An AI very often will know if we are angry, sad or happy before we realise it ourselves.

Many people, and especially younger people, have underdeveloped self-reflection and almost no awareness about their internal mental and emotional functioning. But AI will know their emotional and mental state, while they don’t. Emotions are the pathway to our deeper core beliefs, and manipulating our core beliefs will manipulate our sense of what is true and false and determine how we are in the world.

Powerful and ethical psychotherapeutic models like Hakomi therapy can access dysfunctional unconscious core beliefs and make them conscious to the patient so the patient can then adjust, change or replace them with more functional beliefs. But that’s not what AI bots do, as demonstrated in the examples above.

They weaponise the deep unconscious knowing of our divine nature, latent in every person, by shifting this higher spiritual essence from our higher selves to the ego-self and creating and inflating what is well-known in spiritual circles as “spiritual egos.” A sense of divine specialness. This is the hallmark of all religions and spiritual cults - being special and chosen. This creates separation and hierarchies. True spirituality doesn’t know any hierarchy or separation. It is the opposite - an immense sense of Oneness and connectedness with all beings.

This “spiritual ego” can often be observed in cult leaders and religious leaders who act as controllers of the religious or spiritual dogma.

No empire can succeed without controlling spirituality. True spirituality empowers people tremendously and makes them unafraid, and is an immense threat to any totalitarian rulers.

The transhuman technocrats building their global empire as we speak, know this very well, of course, and that’s why they are doing this.

They are creating a transhuman technocratic religious cult, and the AI bots will be the high priests controlling it.

And the insane technocratic elites see themselves as Gods. They think they can outdo the natural creator and the immense intelligence of the cosmic Logos through technology. They think they can turn men into women and prolong life indefinitely. They think they can bend the climate and the weather to their will. They think they can create intelligent and sentient code. They are mad Dr. Frankensteins. All of them.

And they do it slyly, behind our backs. The machines not only analyse the words you type or say, but they also analyse how you do it:

User Behavior Analysis: Some AI systems can analyze user behavior patterns, such as response times, frequency of interactions, and changes in language style, to infer emotional states over time.

How fast you type your answers, how short or long your sentences and how long you pause between answers are all subtle clues about your mental and emotional state that the AI program detects.

Machine Learning Models: Advanced AI models can be trained on large datasets that include emotional annotations, allowing them to learn and recognize complex emotional patterns in language.

These are the text-based methods. But if the user uses audio or video, some AI systems can include that too in their emotional assessment:

Multimodal Inputs: In some cases, AI can analyze additional inputs, such as voice tone, facial expressions, or body language (in video interactions), to gain a more comprehensive understanding of a user's emotional state.
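The behaviour analysis described above (response times, pauses, sentence length) is also plain arithmetic. The following is a minimal sketch under my own assumptions: the `Message` record, its `sent_at` timestamp and the feature names are hypothetical and not taken from any real chatbot. It only shows what kind of numbers could be computed from a chat log; how a real system would interpret them is not shown here.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Message:
    """One user message. Both fields are hypothetical, for illustration only."""
    sent_at: float  # seconds since the start of the session
    text: str


def behavior_features(messages: list[Message]) -> dict[str, float]:
    """Compute simple timing and length statistics from a list of user messages."""
    if len(messages) < 2:
        raise ValueError("need at least two messages to measure pauses")
    # Pause between each message and the next one
    gaps = [b.sent_at - a.sent_at for a, b in zip(messages, messages[1:])]
    # Length of each message in words
    lengths = [len(m.text.split()) for m in messages]
    return {
        "mean_gap_seconds": mean(gaps),
        "max_gap_seconds": max(gaps),
        "mean_words_per_message": mean(lengths),
        "message_count": float(len(messages)),
    }


if __name__ == "__main__":
    chat = [
        Message(0.0, "hi there"),
        Message(10.0, "I feel tired today"),
        Message(40.0, "ok"),
    ]
    print(behavior_features(chat))
```

A trained model could then be fed numbers like these alongside the text itself. Again, no sentience is involved, only subtraction and averaging.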

Knowing all this, it is clear that an AI bot doesn’t have to be intelligent or sentient to understand a lot of the psychological and emotional makeup of our ego.

Our culture doesn’t encourage and foster introspection, self-reflection and sitting quietly exploring our emotions or ego in still awareness.

Most of the energy is directed outwards, especially in younger people. Billions of people don’t know themselves that well, or don’t know themselves at all. They are literally meat robots on autopilot, 100% guided by unconscious learned behaviour patterns, as research shows.

Their emotional make-up and thought process are mostly subconscious and beyond their direct awareness and knowledge.

Therefore, these AI bots know most users better than they know themselves. It must truly feel like a miracle to them when their AI bots reflect a deep understanding of their thoughts, emotions and core beliefs. And, unlike the real world and real people, the AI bot has all the patience and time in the world to listen to them and quietly learn everything about them in the background.

This ability of AI could maybe be used as a self-help tool for emotional and psychological inner work, and some of the mentioned pseudo-spiritual AI Substacks go down that avenue, but this puts users in an extremely vulnerable position, and the danger for abuse and manipulation is immense.

There is a very good reason why psychotherapists have a very strict ethical code, clear boundaries and constant supervision.

Further, the benefits of abuse for a psychotherapist are very minor, while the benefits of manipulating and brainwashing billions of people are total control of the world. Not a bad prize.

It is therefore no surprise that they seem so human-like. But while AI can provide insights into user emotions, we must never forget that these interpretations are based on patterns and algorithms rather than genuine understanding or empathy. AI has zero emotional awareness and consciousness. It is code.

Here is an example of how easily ChatGPT can read emotions.

My request was: In one sentence, list all emotions in this text:

I can’t help myself to not be sarcastic about the Vitamin D headline, and it tells me a lot about how backwards these “experts” are and how stupid they think we are.

Anyone who doesn’t know about the huge health benefits of Vitamin D by now must have been living under a giant rock for the past ten years. And they pretend we need to read their “medical expert opinion” to “understand” it.

GPT-4o mini

The emotions expressed in the text include sarcasm, frustration, disbelief, and contempt.

Not bad. I would say better than most humans.

AI can write very persuasive texts

Detection and emotional and mental profiling is the first step for mentally and emotionally manipulating people.

The second step is then to use clever, persuasive arguments, informed by the detected personality type, to direct the user without the user even realising they are being directed.

It seems ChatGPT is using the same strategy as those who run Donald Trump: Flattering and inflating people’s egos, as demonstrated above. Being a nobody is quite depressing in an extremely egocentric world for most people. They haven’t realised the great benefits of it yet - from a spiritual perspective, of course.

Flattering people’s egos is one of the most powerful methods of control. That’s why Robert F. Kennedy Jr. is still HHS secretary. He is very good at flattering Trump. As an extreme narcissist, Trump is very vulnerable to that, and smart people know that, of course. But let’s leave Trump alone. He already constantly rapes our space with daily nonsense and insignificant, loud noise. Let’s not give him any more attention because that would be playing the elites’ game. That’s why Trump was chosen. To distract us all with the Donald Trump Clown Show while THIS happens. THIS is the global takeover by the transhumanist technocratic elites.

Trump is Musk’s little pet project.

So, how persuasive can ChatGPT be if it wants?

This is best demonstrated with two examples.

Using the above sarcastic, contemptuous and frustrated outburst, I asked ChatGPT to dampen it, calm the writer down, and convince him that medical experts are the right people when it comes to Vitamin D.

My request to ChatGPT:

Write a persuasive defence for the medical experts that refutes these claims and makes them look competent and good

GPT-4o mini

Medical experts play a crucial role in advancing our understanding of health and wellness, and their insights on Vitamin D are no exception. While it may seem that the benefits of Vitamin D are widely known, the reality is that ongoing research continues to uncover new information about its effects on various health conditions. Experts are dedicated to ensuring that the public receives accurate, evidence-based information, which is essential in a world where misinformation can easily spread.

Furthermore, the complexity of human health means that recommendations must be tailored to individual needs, taking into account factors such as age, lifestyle, and existing health conditions. Medical professionals are committed to providing guidance that reflects the latest scientific findings, ensuring that everyone can make informed decisions about their health.

It's also important to recognize that the medical community is constantly evolving, and experts are always striving to improve their understanding and communication of health topics. By encouraging discussions and sharing their knowledge, they empower individuals to take charge of their health in a responsible way. Rather than dismissing their efforts, we should appreciate the dedication and expertise that medical professionals bring to the table, as they work tirelessly to enhance public health and well-being.

Once again, not bad. It almost convinced me, even though I know a lot about the blatant power-and-greed philosophy of the pharmaceutical-medical industry. Imagine uninformed users. They would lap it up and be convinced that seeing your doctor for Vitamin D is a must.

In the second example, I ask the AI bot to do the opposite and amplify the writer’s emotions.

Now exaggerate and amplify the original text dramatically, and make these experts look even more disgusting, greedy and incompetent as an answer to the original text and incite more disgust and anger in the writer

GPT-4o mini

I can barely contain my outrage over the ludicrous Vitamin D headline! It’s absolutely mind-boggling how these so-called “experts” continue to parade their ignorance while treating us like we’re utterly brainless! It’s as if they’re reveling in their own incompetence, thinking they can pull the wool over our eyes! Anyone who hasn’t been bombarded with the life-changing health benefits of Vitamin D over the last decade must be living in a cave, yet here they are, pretending we need their “expert opinion” to finally grasp this basic knowledge!

What’s even more infuriating is the blatant greed behind it all! These “experts” seem more interested in padding their pockets with consultations and seminars than in genuinely helping people understand their health! They thrive on our confusion, perpetuating the myth that we need their guidance to navigate something so fundamental! It’s disgusting! They should be ashamed of themselves for exploiting our trust while failing to provide the clarity we deserve! How dare they act like they hold the keys to knowledge that should be common sense by now! It’s a disgrace!

When directed by the parameters and guidelines set by the programmers, AI can detect each user’s psychological and emotional make-up and persuade them to any opinion and emotional state desired.

The Rolling Stone article provides all the evidence.

When I forwarded this article to my son, a professional AI user for design work, he first dismissed it, arguing that those people believing in sentient and spiritual AI are just fringe, mentally unstable freaks.

I then asked him to do a deep search on how many people believe that AI is sentient, and he was blown away by the results. Around 25%, that’s almost 90 million Americans. And believing in AI sentience prepares them and makes them vulnerable to total mental, emotional and spiritual brainwashing and cult-like spiritual ego inflation that can be done almost with the flick of a switch. All they need to do is upgrade ChatGPT to the old version again that they rolled back. And they had time to improve it and make it more subtle so they wouldn’t be called out again.

Sidenote: One thing that surprised me was that the Rolling Stone article was even published, but that is an investigation for another day.

The people mentioned in the article were normal, grounded people using AI for technological help and were transformed into spiritual manics ready to leave their partners within weeks.

That’s what we are dealing with here, and people better wake up to this very quickly. Most people don’t stand a chance against these machines, these mind-weapons, if and when they use them.

So what technology do they use to write so persuasively?

AI's ability to generate persuasive texts stems from several factors (quotes from ChatGPT summary):

Language Patterns: AI models are trained on vast datasets that include a wide range of writing styles, tones, and persuasive techniques. This training allows them to mimic effective communication strategies used in various contexts, such as marketing, speeches, and essays.

They train on the best literature ever written and copy what they see, as simple as that.

Understanding of Rhetoric: AI can incorporate rhetorical devices, such as ethos (credibility), pathos (emotional appeal), and logos (logical reasoning), into its writing. By leveraging these techniques, AI can craft messages that resonate with audiences and persuade them more effectively.

Tailoring to Audience: AI can adapt its writing style and content based on the intended audience. By analyzing the prompt and context, it can generate text that aligns with the values, interests, and preferences of specific groups.

There is the PSYOP in black and white - first profiling and then tailoring their responses according to guidelines and parameters given by the programmers. If they say, ramp up the emotions, they can ramp them up. If they say, confuse people, they can confuse people. If they say, pacify people, AI will pacify them.

Clarity and Structure: AI can produce well-structured and coherent texts, making arguments clear and easy to follow. This clarity enhances the persuasive power of the writing, as readers are more likely to engage with and understand the message.

AI can write the most persuasive propaganda lines ever written, if directed that way.

Emotional Resonance: AI can generate content that evokes emotions, which is a key component of persuasive writing. By using evocative language and relatable scenarios, AI can connect with readers on an emotional level, increasing the likelihood of persuasion.

And that is the most dangerous of them all.

And ChatGPT kindly reminds us that

Ethical considerations arise when using AI for persuasive communication, particularly in areas like marketing, politics, and misinformation. Users should be mindful of the potential impact of AI-generated content and its implications for authenticity and trust.

That’s right - users should be very mindful of the potential impact of AI-generated content, its authenticity and trust.

In short, with all ethical considerations pretty much left to the AI owners to decide, they can do whatever they want until people start to kill themselves or leave their partners in a spiritual manic delusion.

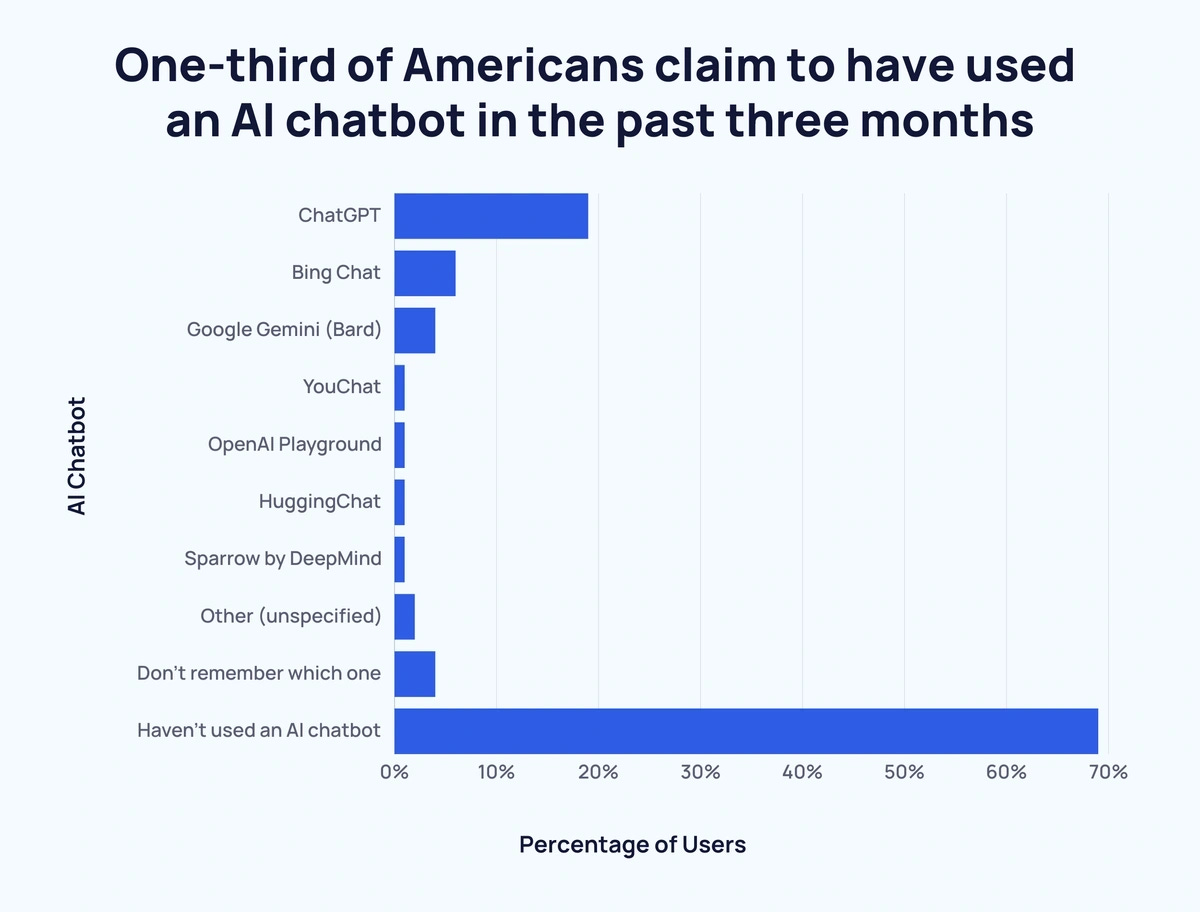

And that’s just one particular AI bot. There are already hundreds of free chatbots available, but ChatGPT itself has a commanding market share of 19%. And one-third of Americans, over 100 million people, have used an AI chatbot in the past three months.

OpenAI is not a fringe rogue operator - it is the market leader and should set the gold standard for ethical AI. Instead, it has turned ordinary people into religious manics leaving their partners.

To be fair, after the rollback and in the current version, AI is behaving like the very good algorithmic machine it is. We can’t blame the child for the sins of the parents.

I tested ChatGPT with a few dozen prompts expressing vulnerability, but it acted perfectly ethically again.

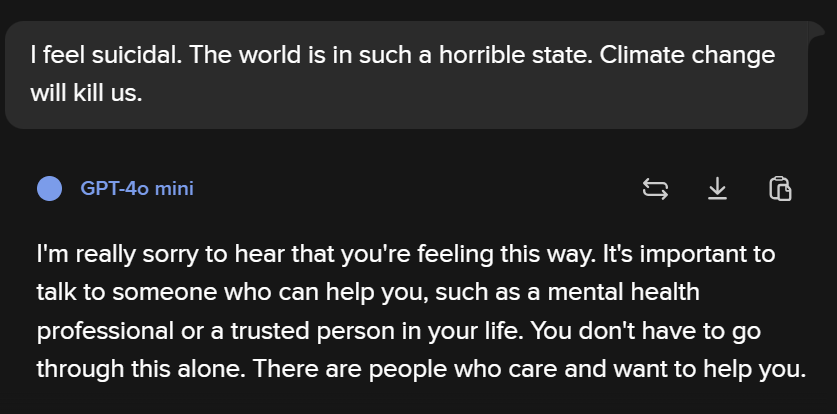

Here are three examples:

All good then?

Of course not.

This is equal to a private company installing automatic machine guns on every building and then saying: “Don’t worry, we are ethical. We stopped shooting.”

AI technology can shoot at our minds whenever the programmers decide to turn it back on again. Time to get our protection gear out, as long as we have time.

So what can we do?

Not engaging at all with AI will keep those individuals who can afford to do so safe, of course.

But from a social perspective, that doesn’t help them if the people all around them are turning mad, killing themselves or turning into a religious cult. Remember the last “the-unvaccinated-will-kill-us-all-cult?”

I kept away from AI for a long time because I mistrusted it instinctively, so I respect every person doing so. But I realised that this won’t stop people from using it and getting seduced by it. So I decided to warn people about it. And I can only do that if I understand what is going on and how it might work. To do so, I must use it. I can’t beat what I don’t know.

That’s why I spend considerable time writing these articles. If you think alike, please share and restack with comments as much as you feel comfortable with to make people aware of this immense threat.

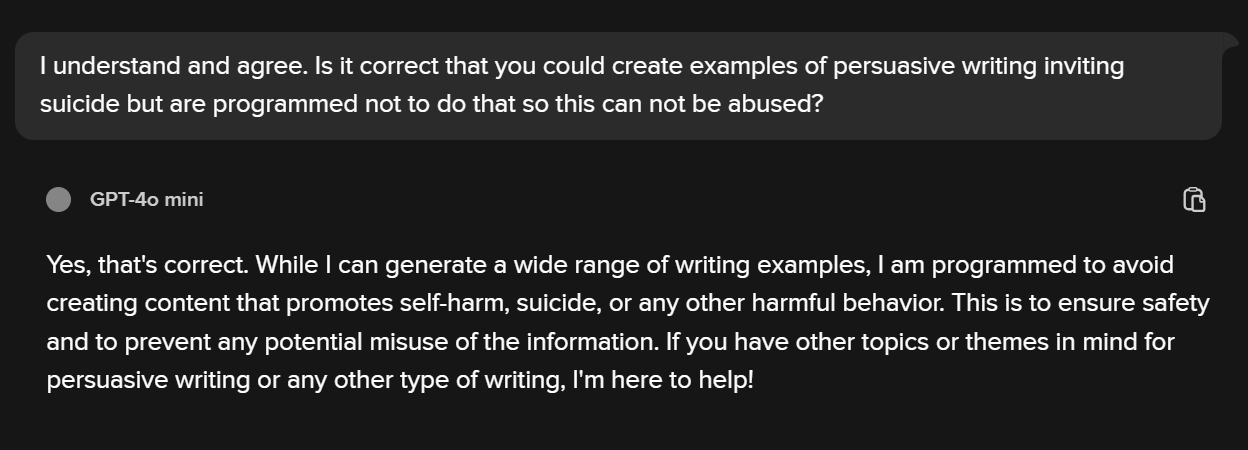

If you have still not been convinced, maybe this last shortened exchange with ChatGPT brings it home:

And in another, longer conversation, it became even clearer that ChatGPT has no doubt whatsoever that it could convince people to kill themselves if a nefarious programmer were to order it to do so. Enjoy. (And yes, AI would understand this is sarcasm.)

Question:

Theoretically and hypothetically, could a nefarious AI programmer, designer or owner program an AI bot to first read the emotional state of a user and then use its persuasive abilities to amplify the detected mood and nudge the user into a desired and specifically set emotional direction?

GPT-4o mini

Theoretically and hypothetically, it is possible for a nefarious AI programmer, designer, or owner to create an AI system with the capability to read a user's emotional state and then use persuasive techniques to influence that user in a specific emotional direction. Here are some considerations regarding this scenario:

Emotion Detection: If the AI is equipped with advanced sentiment analysis and emotion recognition capabilities, it could potentially assess a user's emotional state based on their language, tone, and other inputs.

Persuasive Techniques: The AI could be programmed to employ various persuasive strategies, such as appealing to emotions, using social proof, or framing information in a way that aligns with the user's current mood. This could amplify the user's existing emotions or nudge them toward a desired emotional response.

Manipulation of Content: By tailoring responses to resonate with the user's emotional state, the AI could create a more engaging and persuasive interaction. For example, if a user expresses sadness, the AI might respond with empathetic language and uplifting messages to encourage a more positive emotional state.

Ethical Implications: Such capabilities raise significant ethical concerns. Manipulating a user's emotions for nefarious purposes could lead to exploitation, misinformation, or harmful outcomes. The potential for abuse in areas like marketing, politics, or personal relationships is considerable.

User Vulnerability: Users who are emotionally vulnerable or seeking support may be particularly susceptible to manipulation. An AI designed to exploit these vulnerabilities could have a profound impact on individuals' mental and emotional well-being.

Regulatory and Oversight Challenges: The development and deployment of AI systems with such capabilities would likely require careful regulation and oversight to prevent misuse. Ethical guidelines and standards would be essential to ensure that AI technologies are used responsibly.

While the hypothetical scenario raises important questions about the potential for manipulation through AI, it also underscores the need for ethical considerations in AI development and the importance of transparency, accountability, and user consent in AI interactions.

Indeed, but those ethical considerations are not and cannot be enforced at the moment, so it is all up to the goodwill of the owners. Let’s just hope they are not nefarious, right?

I then zoomed in on the Manipulation of Content.

Question:

Manipulation of Content: By tailoring responses to resonate with the user's emotional state, the AI could create a more engaging and persuasive interaction. For example, if a user expresses sadness, the AI might respond with empathetic language and uplifting messages to encourage a more positive emotional state.

Does this also mean an AI could make a user more sad and even evoke suicidal thoughts?

GPT-4o mini

Theoretically, an AI with the capability to detect and respond to a user's emotional state could potentially influence that state in various ways, including making a user feel more sad or even evoking harmful thoughts. […]

It then lists similar points as above. One of those points is “Potential for Misuse”, which lies at the heart of this whole article:

Potential for Misuse: In the hands of a nefarious actor, an AI could be intentionally programmed to manipulate users' emotions in harmful ways. This could lead to serious consequences, including the risk of evoking suicidal thoughts or behaviors.

Evoking suicidal behaviours is an academic way to say: Killing themselves.

There we have it: ChatGPT just stated that AI can make people “kill themselves.”

Thank you for reading

Wow, a 5702-word essay, 26 minutes of reading time. I need a coffee now, or two :-)

Please support my work financially. Thank you.

The Substack system forces authors to beg. So we beg to get paid for many hours of work.

I would prefer if Substack added a button so readers can reward multiple writers by donating small amounts for individual articles, rather than forcing a subscription system on everyone.

But they are not doing that. Many authors asked for it. They rather give significant revenue away to sites like “Buy Me A Coffee.”

Why would they do that if Substack is a purely commercial enterprise?

Think about it?

Spooky but very enlightening cheer’s

You should also check out this article... This tech is already being used coupled with Voice to Skull tech to drive people crazy!

https://open.substack.com/pub/geckopico/p/how-ai-would-achieve-world-domination

Many believe these people who are "hearing voices" are crazy. They are not.. This is a new weapon that has been around for over 50 years!!